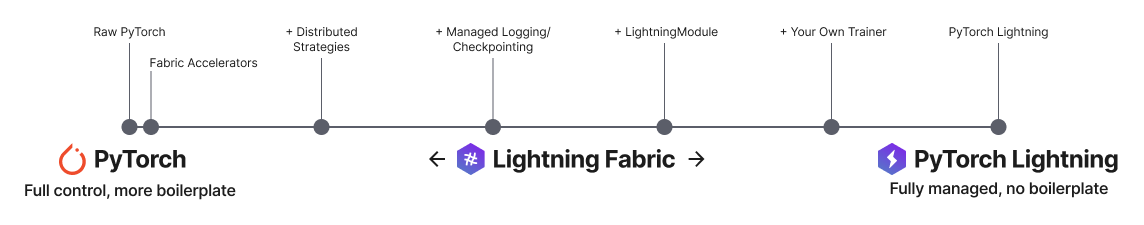

**Fabric is the fast and lightweight way to scale PyTorch models without boilerplate**

______________________________________________________________________

[](https://pypi.org/project/lightning_fabric/)

[](https://badge.fury.io/py/lightning_fabric)

[](https://pepy.tech/project/lightning_fabric)

[](https://anaconda.org/conda-forge/lightning_fabric)

## Maximum flexibility, minimum code changes

With just a few code changes, run any PyTorch model on any distributed hardware, no boilerplate!

- Easily switch from running on CPU to GPU (Apple Silicon, CUDA, …), TPU, multi-GPU or even multi-node training

- Use state-of-the-art distributed training strategies (DDP, FSDP, DeepSpeed) and mixed precision out of the box

- All the device logic boilerplate is handled for you

- Designed with multi-billion parameter models in mind

- Build your own custom Trainer using Fabric primitives for training, checkpointing, logging, and more

```diff
+ import lightning as L
  import torch
  import torch.nn as nn
  from torch.utils.data import DataLoader, Dataset

  class PyTorchModel(nn.Module):
      ...

  class PyTorchDataset(Dataset):
      ...

+ fabric = L.Fabric(accelerator="cuda", devices=8, strategy="ddp")
+ fabric.launch()

- device = "cuda" if torch.cuda.is_available() else "cpu"
  model = PyTorchModel(...)
  optimizer = torch.optim.SGD(model.parameters())
+ model, optimizer = fabric.setup(model, optimizer)
  dataloader = DataLoader(PyTorchDataset(...), ...)
+ dataloader = fabric.setup_dataloaders(dataloader)
  model.train()

  for epoch in range(num_epochs):
      for batch in dataloader:
          input, target = batch
-         input, target = input.to(device), target.to(device)
          optimizer.zero_grad()
          output = model(input)
          loss = loss_fn(output, target)
-         loss.backward()
+         fabric.backward(loss)
          optimizer.step()
          lr_scheduler.step()
```

______________________________________________________________________

# Getting started

## Install Lightning
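
Assuming a standard pip setup, Fabric is typically installed with `pip install lightning`, which provides the `import lightning as L` entry point used above. It is also published as a standalone `lightning-fabric` package, the one the PyPI link points to. The snippet below is a small sketch for checking which of the two is present in the current environment; the helper name `installed_version` is hypothetical.

```python
from importlib import metadata


def installed_version(package):
    """Return the installed version of `package`, or None if it is missing."""
    try:
        return metadata.version(package)
    except metadata.PackageNotFoundError:
        return None


for name in ("lightning", "lightning-fabric"):
    print(f"{name}: {installed_version(name) or 'not installed'}")
```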

______________________________________________________________________

# Examples

- [GAN](https://github.com/Lightning-AI/lightning/tree/master/examples/fabric/dcgan)

- [Meta learning](https://github.com/Lightning-AI/lightning/tree/master/examples/fabric/meta_learning)

- [Reinforcement learning](https://github.com/Lightning-AI/lightning/tree/master/examples/fabric/reinforcement_learning)

- [K-Fold cross validation](https://github.com/Lightning-AI/lightning/tree/master/examples/fabric/kfold_cv)

______________________________________________________________________

## Asking for help

If you have any questions, please:

1. [Read the docs](https://lightning.ai/docs/fabric).

1. [Ask a question in our forum](https://lightning.ai/forums/).

1. [Join our Discord community](https://discord.com/invite/tfXFetEZxv).
